Mobile Robotics Project – Thymio Navigation

Dec 7, 2023

- Grade: 5.75 / 6

TL;DR

- Built an autonomous navigation system for the Thymio robot.

- Integrated computer vision, path planning, motion control, local obstacle avoidance, and Kalman filter sensor fusion.

- Achieved robust trajectory following even under disturbances (kidnapping, goal relocation, camera loss).

- Graded 5.75 / 6 in the EPFL Mobile Robotics course.

At a glance

- Role: Robotics engineer (team of 3)

- Timeline: Oct–Dec 2023 (7 weeks)

- Context: EPFL Mobile Robotics course project

- Users/Stakeholders: Students, robotics researchers, course staff

- My scope: Vision system, Kalman filter integration, evaluation

Problem

Robots must navigate dynamic, uncertain environments where GPS is not always available and sensors are noisy. We addressed four challenges:

- Localizing the robot robustly despite drift and noise.

- Planning safe global paths while adapting to sudden changes.

- Avoiding unseen obstacles in real time.

- Handling disturbances like robot kidnapping or camera failure.

The key question: How can a small mobile robot combine vision, planning, and sensor fusion to achieve reliable autonomy indoors?

Solution overview

We designed a modular navigation pipeline:

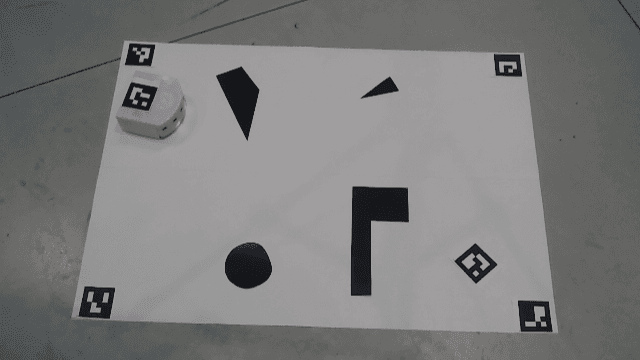

- Computer vision with ArUco markers for global localization (simulating GPS) and obstacle detection.

- Global planning using Dijkstra’s algorithm on a grid map created from vision.

- Motion control with heading/waypoint tracking.

- Local obstacle avoidance using proximity sensors.

- Sensor fusion with a Kalman filter for robustness.

Architecture

- Inputs: Camera feed + onboard odometry/proximity sensors.

- Modules: Vision → Path Planner → Motion Controller → Local Navigation → Kalman Filter.

- Outputs: Actuation commands to the Thymio (rotation, translation).

Data

- Inputs:

  - Overhead camera stream with ArUco markers for robot, obstacles, and goals.

  - Onboard proximity sensors for obstacle detection.

  - Wheel odometry for dead-reckoning.

- Grid map: Discretized 2D environment built dynamically from vision.

- Disturbances tested: robot displacement (kidnapping), goal relocation, and temporary vision loss.
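To make the grid map concrete, here is a minimal sketch of how a discretized occupancy grid could be built. It assumes obstacles arrive as axis-aligned rectangles in centimeters and that a safety margin (for example, the robot radius) is added around each one. The function name `build_grid`, the rectangle format, and the cell size are illustrative assumptions, not the project's actual code.

```python
import math


def build_grid(width_cm, height_cm, cell_cm, obstacles, margin_cm=0.0):
    """Return a row-major grid of 0 (free) / 1 (occupied) cells.

    obstacles: list of (x_min, y_min, x_max, y_max) rectangles in cm
               (hypothetical format, chosen for this sketch).
    margin_cm: safety margin added around each obstacle.
    """
    cols = math.ceil(width_cm / cell_cm)
    rows = math.ceil(height_cm / cell_cm)
    grid = [[0] * cols for _ in range(rows)]
    for x0, y0, x1, y1 in obstacles:
        # Grow the rectangle by the margin, then clamp it to the map.
        c0 = max(0, math.floor((x0 - margin_cm) / cell_cm))
        r0 = max(0, math.floor((y0 - margin_cm) / cell_cm))
        c1 = min(cols - 1, math.floor((x1 + margin_cm) / cell_cm))
        r1 = min(rows - 1, math.floor((y1 + margin_cm) / cell_cm))
        for r in range(r0, r1 + 1):
            for c in range(c0, c1 + 1):
                grid[r][c] = 1
    return grid


# A 40 cm x 30 cm map with 10 cm cells and one obstacle.
grid = build_grid(40, 30, 10, [(10, 10, 19, 19)], margin_cm=5)
for row in grid:
    print(row)
```

Inflating obstacles by a margin lets the planner treat the robot as a point, at the cost of closing off narrow gaps.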

Method

1. Vision System

- ArUco detection for robot, goal, and obstacles.

- Preprocessing via grayscale + thresholding.

- 2D occupancy grid map generation.

- Real-time updates for dynamic environments.
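As an illustration of the localization step, the sketch below turns the four corners of a detected marker into a robot pose. It assumes the corners arrive in OpenCV's ArUco order (top-left, top-right, bottom-right, bottom-left, relative to the marker itself) and that the marker's top edge faces the robot's forward direction. That mounting convention and the function name `marker_pose` are assumptions made for this example.

```python
import math


def marker_pose(corners):
    """Estimate (x, y, heading) from four marker corners in image pixels.

    corners: [(x, y), ...] ordered top-left, top-right, bottom-right,
             bottom-left relative to the marker itself.
    heading: radians, counter-clockwise positive, 0 along the image x-axis.
    """
    cx = sum(p[0] for p in corners) / 4.0
    cy = sum(p[1] for p in corners) / 4.0
    tl, tr, br, bl = corners
    # Midpoints of the marker's top and bottom edges.
    top = ((tl[0] + tr[0]) / 2.0, (tl[1] + tr[1]) / 2.0)
    bottom = ((bl[0] + br[0]) / 2.0, (bl[1] + br[1]) / 2.0)
    # The heading points from the bottom edge to the top edge. Image y grows
    # downward, so it is negated to get a counter-clockwise-positive angle.
    heading = math.atan2(-(top[1] - bottom[1]), top[0] - bottom[0])
    return cx, cy, heading


# An upright marker: its top edge points "up" in the image.
x, y, heading = marker_pose([(0, 0), (10, 0), (10, 10), (0, 10)])
print(x, y, math.degrees(heading))
```

Pixel coordinates would still need to be scaled to map units before being used by the planner or the filter.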

2. Global Navigation

- Dijkstra’s algorithm for shortest path search.

- Path reduced to discrete waypoints for smoother tracking.

- Continuous replanning when environment or goal changes.
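The planning step can be sketched as Dijkstra's algorithm over the occupancy grid. The version below assumes an 8-connected grid with diagonal moves costing √2 and allows diagonal moves past obstacle corners; neither choice is confirmed by the project. The `simplify` helper, which keeps only cells where the direction changes, is one possible way to reduce a cell path to waypoints and is likewise an assumption.

```python
import heapq
import math


def dijkstra(grid, start, goal):
    """Shortest path on a grid of 0 (free) / 1 (occupied) cells.

    start, goal: (row, col). Returns the list of cells from start to goal,
    or None if the goal cannot be reached.
    """
    rows, cols = len(grid), len(grid[0])
    moves = [(-1, 0), (1, 0), (0, -1), (0, 1),
             (-1, -1), (-1, 1), (1, -1), (1, 1)]
    dist = {start: 0.0}
    parent = {}
    queue = [(0.0, start)]
    while queue:
        d, cell = heapq.heappop(queue)
        if cell == goal:
            break
        if d > dist[cell]:
            continue  # stale queue entry, a shorter route was already found
        for dr, dc in moves:
            r, c = cell[0] + dr, cell[1] + dc
            if not (0 <= r < rows and 0 <= c < cols) or grid[r][c]:
                continue
            nd = d + math.hypot(dr, dc)
            if nd < dist.get((r, c), math.inf):
                dist[(r, c)] = nd
                parent[(r, c)] = cell
                heapq.heappush(queue, (nd, (r, c)))
    if goal not in dist:
        return None
    path = [goal]
    while path[-1] != start:
        path.append(parent[path[-1]])
    return path[::-1]


def simplify(path):
    """Keep only the cells where the direction of travel changes."""
    if len(path) < 3:
        return list(path)
    kept = [path[0]]
    for prev, cur, nxt in zip(path, path[1:], path[2:]):
        step_in = (cur[0] - prev[0], cur[1] - prev[1])
        step_out = (nxt[0] - cur[0], nxt[1] - cur[1])
        if step_in != step_out:
            kept.append(cur)
    kept.append(path[-1])
    return kept


grid = [[0, 1, 0],
        [0, 1, 0],
        [0, 0, 0]]
print(dijkstra(grid, (0, 0), (0, 2)))
```

Replanning then amounts to calling `dijkstra` again from the current cell whenever the grid or the goal changes.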

3. Motion Control

- Heading computed relative to next waypoint.

- Rotation and translation commands that handle angle wrap-around (for example, crossing from +180° to −180°).

- Smooth transitions between waypoints.
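A minimal sketch of heading-based waypoint tracking follows. The key detail is wrapping the heading error to [-π, π) so the robot always turns the short way around. The rotate-in-place threshold, the gains, and the speed units are hypothetical values chosen for illustration, and the proportional steering rule is an assumed stand-in for whatever control law the project actually used.

```python
import math


def wrap_angle(angle):
    """Wrap an angle in radians to the interval [-pi, pi)."""
    return (angle + math.pi) % (2.0 * math.pi) - math.pi


def wheel_speeds(pose, waypoint, base_speed=100.0, turn_gain=80.0,
                 align_tol=0.5):
    """Return (left, right) wheel speed targets toward a waypoint.

    pose: (x, y, theta) with theta in radians, counter-clockwise positive.
    All gains and thresholds are illustrative, not tuned values.
    """
    x, y, theta = pose
    desired = math.atan2(waypoint[1] - y, waypoint[0] - x)
    error = wrap_angle(desired - theta)
    # Rotate in place when badly misaligned, otherwise drive and steer.
    forward = 0.0 if abs(error) > align_tol else base_speed
    turn = turn_gain * error
    # A positive error means the waypoint is to the left, so the right
    # wheel runs faster than the left.
    return forward - turn, forward + turn


print(wheel_speeds((0.0, 0.0, 0.0), (1.0, 0.0)))  # aligned: drive straight
print(wheel_speeds((0.0, 0.0, 0.0), (0.0, 1.0)))  # waypoint at 90°: rotate
```

Without the wrap, a robot facing 179° with a waypoint at -179° would compute a 358° turn instead of a 2° one.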

4. Local Navigation (Obstacle Avoidance)

- Proximity sensors detect obstacles unseen by vision.

- Behavior-based responses: stop, rotate, or detour.

- Local avoidance integrated with global path following.
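The behavior-based logic above could be sketched as a simple decision rule over the front proximity readings. This example assumes five front sensors ordered left to right, with higher readings meaning closer obstacles. The thresholds, the function name `avoidance_action`, and the behavior labels are hypothetical, and the detour behavior is left out for brevity.

```python
def avoidance_action(prox, near=3000, detect=1000):
    """Pick a behavior from five front proximity readings (left to right).

    Higher readings mean closer obstacles. Thresholds are illustrative.
    Returns "follow_path", "stop", "rotate_left", or "rotate_right".
    """
    left = max(prox[0:2])
    center = prox[2]
    right = max(prox[3:5])
    if max(left, center, right) < detect:
        return "follow_path"  # nothing close enough to react to
    if left >= near and center >= near and right >= near:
        return "stop"  # blocked across the whole front
    # Turn away from the side with the stronger reading.
    return "rotate_right" if left > right else "rotate_left"


print(avoidance_action([0, 0, 0, 0, 0]))
print(avoidance_action([2000, 500, 0, 0, 0]))
```

Once the readings drop back below the detection threshold, the rule returns `"follow_path"` and the waypoint controller takes over again.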

5. Kalman Filter (Sensor Fusion)

- Prediction step: wheel odometry.

- Update step: camera-based localization.

- Reduces noise, compensates drift, and smooths orientation jumps.

- Keeps predicting during camera outages.
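The predict/update cycle can be illustrated with a deliberately simplified linear Kalman filter. It assumes odometry has already been converted to a world-frame displacement (dx, dy, dθ), that the camera measures the pose directly, and that x, y, and heading are independent with diagonal noise. Under those assumptions the filter reduces to three scalar filters. The class name `PoseFilter` and the noise values are hypothetical, and the project's actual state vector and filter form may differ.

```python
import math


def wrap_angle(angle):
    """Wrap an angle in radians to the interval [-pi, pi)."""
    return (angle + math.pi) % (2.0 * math.pi) - math.pi


class PoseFilter:
    """Linear Kalman filter over (x, y, theta) with independent components."""

    def __init__(self, pose, var=1.0, q=0.05, r=0.5):
        self.pose = list(pose)  # state estimate
        self.var = [var] * 3    # estimate variance per component
        self.q = q              # process noise added at each prediction
        self.r = r              # camera measurement noise variance

    def predict(self, delta):
        """Apply an odometry displacement (dx, dy, dtheta) in the world frame."""
        self.pose = [p + d for p, d in zip(self.pose, delta)]
        self.pose[2] = wrap_angle(self.pose[2])
        self.var = [v + self.q for v in self.var]  # uncertainty grows

    def update(self, measured):
        """Correct with a camera pose; pass None during a camera outage."""
        if measured is None:
            return  # no measurement: keep the predicted estimate
        for i in range(3):
            gain = self.var[i] / (self.var[i] + self.r)
            innovation = measured[i] - self.pose[i]
            if i == 2:
                innovation = wrap_angle(innovation)  # shortest angular error
            self.pose[i] += gain * innovation
            self.var[i] *= 1.0 - gain  # uncertainty shrinks
        self.pose[2] = wrap_angle(self.pose[2])


f = PoseFilter((0.0, 0.0, 0.0))
f.predict((1.0, 0.0, 0.0))
f.update((1.2, 0.1, 0.05))  # camera available
f.predict((1.0, 0.0, 0.0))
f.update(None)              # camera lost: odometry only
print(f.pose, f.var)
```

During an outage only `predict` changes the state, so the variance keeps growing until the camera returns and the next update pulls the estimate back toward the measurement.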

Experiments & Results

- Trajectory following: Robot reached goals across cluttered maps with high reliability.

- Dynamic robustness: Recovered from kidnapping (manual relocation) and goal relocation.

- Camera loss: Kalman filter maintained stable pose estimates.

- Obstacle avoidance: Robot rerouted safely in real time when encountering unexpected obstacles.

Impact

- Demonstrated how vision + planning + sensor fusion enables robust autonomy on small robots.

- Created a demo for EPFL’s Mobile Robotics course.

What I learned

- Importance of modular system design in robotics.

- Hands-on experience with Kalman filtering and multi-sensor fusion.

- Trade-offs between global planning and local avoidance.

- Debugging challenges in real-time embedded robotics.

Future Work

- Add multi-robot coordination (swarm planning).

- Extend to larger maps with SLAM techniques.

- Improve motion control with PID or MPC for smoother trajectories.

- Introduce dynamic obstacle tracking via computer vision.

References

- EPFL Mobile Robotics course materials.

- OpenCV ArUco marker detection docs.

- Thymio robot official documentation.

- Thrun et al., Probabilistic Robotics.